I was on some sort of American campus, one of those where they found a gimmick to show how cool they are. In this case it was reptiles. Alligators were moving on the side of the street, right next to people. They would hiss or even try to bite if you got close enough. I was moving towards the exit, which was close to the sea and led onto a sort of beach, and I was wondering what would happen if one of these large three-meter animals bit someone. And suddenly something happened. Something large, much larger than a crocodile, came out of the water and snapped up a fully grown adult alligator like a stork snatches frogs. What was that thing? A family of three was watching, fascinated, moving closer to see what was going on. "Are these people stupid?" I thought.

Sure enough, the dinosaur thing bit through the little kid. The father jumped to help but was completely ignored, his sudden pain and anguish irrelevant to the chomping reptile. Other tourists were being attacked. One in particular drew my eyes: he had one of the things pulling with its teeth on his backpack. The man acted like something annoyed him, making repeated "Ugh!" sounds while trying to climb back on the walkway. His demeanor indicated that he didn't care at all about the reptiles, temporary annoyances that stopped him from returning to normal life. At one moment he paused to scratch an itch on his face. His fingers were bitten clean off, blood gurgling from the stumps. He was in shock.

Somehow, a famous actor jumped from somewhere and made it clear that it had been just a show, although the realism of it was so extreme that I felt an immediate cognitive dissonance and the scene switched.

I was at the villa of a very rich friend and there was wind outside. Really strong wind. Nearby wooden booths that were at the edge of his garden were pushed towards me, threatening to crush me against the wall of the building. I went inside, telling my friend that he should take whatever he needed from outside because the wind was going to tear it away. He calmly pressed a button on a remote and an inflatable wall grew around the compound, holding the wind at bay. Cool trick, I thought, trying to figure out whether it was firmly anchored to the ground by wires or got all its structural strength from the material it was made of. It had to have some sort of Kevlar-like component, otherwise it would have been easy to puncture. I calculated the cost of such a feature as astronomical.

We went in, climbing to the second or third floor. Out the window I saw buildings, like near the center of Bucharest. The wind was wreaking havoc on whatever was not firmly fixed. The building across the street was 10 floors tall and apparently someone was doing roofing work when the wind started. The fire used to heat up the tar was now really intense from all the fresh oxygen and smoke was billowing strongly. Suddenly the top floor caught fire, flames stoked by the wind into unstoppable blazes. I called my friend to the window and showed him what was going on. While we watched, floor after floor was engulfed in flames, explosions starting to be heard from within. The building caught fire like a cigarette smoked in haste. I thought that if the wind went through the building, via broken windows and walls, then a very high temperature could be reached. And as I was thinking that, the building went down. It didn't collapse, instead it leaned towards our villa and crashed right next to it.

I panicked. I knew that on 9/11 the towers collapsed because of the intense heat, but a nearby building was structurally weakened by the towers falling and it too went down eventually. I ran towards the ground floor, jumping over stairs. If the villa crumbled, I did not want to get stuck inside. While fleeing, I was considering my options. The building that went down had people in it, maybe I should head right there and help people get out. Then I also remembered the thousands of people helping after September 11 who later got lung cancer from all the toxic stuff they put into buildings. Besides, what do I know about rescuing people from rubble? I would probably walk on their heads and crash more stuff on top of the survivors. I decided against it, feeling like an awful human being who is smart enough to rationalize anything.

As I got down I noticed two things. First there was a large, suffocating quantity of white, big-grained dust being blown by the wind, making it hard to breathe. The light was filtered through this dust, giving it a surreal dusky quality. I used my shirt to create a makeshift breathing mask for the dust. Then there were the people. A sexy young woman, short skirt, skimpy blouse, long hair, the type that you see prowling the city centers, was now crawling on the ground, left leg sectioned just under the knee, but not bleeding, instead ending with a carbonized stump, like a lighted match that you put out before it disintegrated. I saw people with their skins completely burned off, moving slowly through the rubble, reaching for me. Some were nothing more than bloodied skeletons, some were partially covered in tar, the contrast of red inflamed burns and black tar or burned clothing evoking demonic visions of hell. These people were crying in pain and coming towards me from all sides, climbing over the ruins of buildings and cars. The smell was awful. I ran, parkour style.

As I woke up I tried to remember as much of the dream as possible. I still have no idea what I was doing on that campus and how I had gotten there. I was fascinated by the scene with the shocked tourist, as it implies knowledge of human behavior that I don't normally possess when awake. It was completely believable, yet at the same time bizarre and unexpected. Great scene! I've had disaster dreams before, top quality special effects and all, but never was I confronted with the reality of the aftermath (I usually died myself in those). I am certain I made logical decisions in the situation, but at the same time I felt ashamed at the powerlessness, the fear and the fact that I was running away. Should I have stayed and helped (and gotten cancer)? Should I have rushed home and blogged about it? Maybe a strategic retreat in which I would have conferred with experts and then maybe gone back with professional reinforcements?

What felt new was that while having all those random thoughts and running, I wasn't really thinking of my life. I wasn't considering whether my life is worth saving or what the purpose of my running actually is. I wasn't thinking at all; just running. It was visceral, like my body was on autopilot, taking that stupid head away from the danger before it thinks itself to death. Considering it was a virtual body in my actual head, that's saying something. I am just not sure what.

Anyway, beats the crap out of the one with being late for the exam, not having studied anything...

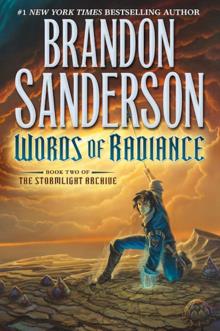

Brandon Sanderson does not disappoint with the sequel to Way of Kings. Quite the opposite, in fact, weaving more and more into the vast tapestry that is the world of the Stormlight Archive series. The characters converge towards a point in time and space where everything and anything will be decided, the fate of the entire world, with just a few courageous people standing between life and complete desolation.
