There is this old joke about how having a senior developer around is like having a little child sitting next to you. "How do I do that?", you ask. And the SD asks "Why?". "Well, the boss asked me to" "But, why?. "The client wants it" "Why?". "Because he needs this other thing" "Why?". I am afraid the joke is very true. Usually, at the end of the exchange you get to the real need at the base of the request and understand that it is the thing that must be fulfilled, not the request as it came through several filters, each adding or removing from the real purpose of the task. And if there is something I want to impart from my extensive experience as a software developer it is that you must always start from the core need and go from there.

However, I am not here to discuss how to write code, for once, but on how to organize your team (or yourself as a team of one) from this need driven perspective. And please don't call it NDD and read it NEED or whatever, because this is just a general principle that can help you in many domains. So let's analyse

Agile. It's been done to death, so what I am trying to do here is not summarize what others have done, but to simplify things until they don't even need a name anymore.

First, what is Agile Development? Wikipedia says:

"Agile software development is an approach to software development under which requirements and solutions evolve through the collaborative effort of self-organizing and cross-functional teams and their customer(s)/end user(s). It advocates adaptive planning, evolutionary development, empirical knowledge, and continual improvement, and it encourages rapid and flexible response to change. The term Agile was popularized, in this context, by the Manifesto for Agile Software Development. The values and principles espoused in this manifesto were derived from and underpin a broad range of software development frameworks, including Scrum and Kanban." So Agile is not Scrum, but Scrum is underpinned by Agile principles and values. That is important, because many shops want to do Agile and so they do Scrum or Kanban and they either try to follow them to the letter (purists) or adapt them to their requirements (which is more in line with Agile principles, but the implementations usually suffer). One wonders how come there are not a lot of different methodologies out there, just like there are software patterns or programming languages. The answer is that no one starts off with understanding their need for Agile and in fact many don't even know or care what it is. They do Scrum so that they get the Agile badge, they tell everyone they "do Agile" and pompously ask their candidates if they "know Agile".

But if someone asks "Why do you want to do Agile?" the answers are not so easy to get. Of course there are advantages in the approach, that is why it's so popular, but you don't get advantages for your collection, they need to drive you to some kind of goal. One might say that for every process you need metrics in order to analyse your progress. Scrum does that. You don't need metrics for the efficacy of the metric system, do you? But still you need some way of gauging the usefulness of your methodology itself, otherwise we would still be using

imperial units.

I am more accustomed to Scrum, but I don't want to analyse it, instead I want to measure the usefulness of Agile as a concept by asking the very simple question "Why?". As we've seen, its attributes include:

- self organizing teams

- cross functional teams

- collaborative effort between teams and customers

- adaptive planning

- evolutionary development

- empirical knowledge

- continual improvement

- rapid and flexible response to change

Why self organizing teams? That's the first and one of the most important and complex aspects of Agile. If the team self organizes, then everyone in it shares responsibility. There is no need for micromanagement from above, the team acts as a unit, you get it as a neat package that you don't care about. In theory when a team is inefficient from the organization's point of view, you should fire the team, not individual people. If one member is not performing as expected, then it's the responsibility of the team to fix them or get rid of them. Also theoretically, an Agile team doesn't need a manager.

But in practice I haven't seen this implemented except in small startup teams. There is always a manager, a director or more of them. There is always micromanagement. There is always some tendril of HR or organizational graph that affects people individually. Why is that? Because teams are cross functional, too. A developer doesn't need or want in any way to have to measure the work efficiency of their colleagues, have to tell them how to do things or get to the point where they have to push for firing them. A developer doesn't want to administrate the team. So what is a manager in the Agile world? That's right, a member of the team. If devs would not have a manager, they would probably hire one, as part of the self organization of the team. Moreover, for teams inside larger organizations like corporations, the "customer" is the corporation, so the manager role is often combined with the one for "customer representative" or "product owner" or "producer" or something like that. They're the people you go to in order to understand what it is you have to do.

And since I've started talking about

cross functional teams, this means that Agile doesn't need or indeed recommend that people be interchangeable, having the same skill sets. Whenever you hear someone say "everyone should know everything" that's not Agile. It's just a sign that someone from above wants to micromanage the team without having to know anyone in it or ever decide the direction it should work in. It's the sign of a top-down approach that is ultimately inefficient from both directions: the team feels pressure it doesn't need while being restricted in what it can do and the management has to perform tasks that they don't need to do. I haven't seen one team of people treated as interchangeable units be efficient, nor have I heard of any. So why cross functional teams? Because people are individual beings and are different and, for every skill they have, they put in effort to first reach a basic level of knowledge, then more effort to become good or even expert at them. An Agile team needs to be flexible enough to accommodate for any type of person, within reason.

As an aside, think of the skills of a challenged person. The legislation of many countries requires now that software be accessible to a wide variety of disabled people. So whenever you hear that people should be versed in all aspects of the team's business ask them for Braille courses.

Cross functional also includes the skill of knowing what the customer wants, whether they are a member of your organization or someone sent by the client. It's one of the most necessary roles to be filled in a team. If a team were a living thing, the product owner would be the eyes and ears. You can be strong, fast, deadly, but it doesn't help if you can't find your prey.

So why collaborative effort between team and customers? Because you need to know why do you what you do. This entire post is dedicated to the question of why, so I won't press this. Just remember that if the team doesn't know why something is required or necessary, then it is not yet fit to perform that task. People are going to hate me for this, but we've already established the need to understand the client and that in large organizations the customer is the organization, so the logical conclusion is clear: office politics, psychopathic displays of power, idiotic decisions coming from up high are all part of the requirements. There is a caveat, though: the need of the customer needs to be clear, not the want. If the liaison to the customer is withholding or unaware of the real reasons something needs to be done, then the team suffers.

As an aside to all manager or high corporate types out there, it's way better to explain to a team that they have to scrap a project because you hate the guts of the person who proposed it, than to invent business reasons that are clearly bullshit for destroying months of loving work. In the first case you honestly admit you are an asshole, but in the second you prove you are one.

Now, what's this about adaptive planning? If you already know what is needed, someone should let you do your job in peace. You come to them when it's done. That was the fallacy of the

Waterfall system. That is also why Waterfall still works. In a situation where needs are clear and do not change, there is no need for agility. Unfortunately, these situations are rare, especially since I've included in the list of customer needs the legacy of us being descended from apes. That means adapting quickly, without regret, changing direction, sometimes turning back completely, abandoning projects, starting new ones that you recommended starting years ago and was laughed out from the room for it and so on.

It's not only that. Adapting to your client's need is something that you would have to do anyway, in any realistic scenario. But it also means that you have to adapt to the technological changes in the world. Maybe you have to rewrite your code with another technology or explore new ones that just appeared out of thin air. Adaptation means that a big part of your job as an Agile team is exploration. This is emphasized by the

empirical knowledge attribute above. These two go hand in hand. You adapt by understanding and for this you must be in the loop, you need to know things. Well, one of the members of the team needs to, at least. Adapting means "rapid and flexible response to change".

Therefore, whenever someone asks you if you are Agile or demands that you are, you can tell them about all the new tech that is rumored to come out this year and how you can barely contain your enthusiasm to be working for someone who enables exploration in the true spirit of Agile. Preferably after you've signed a contract, maybe.

But I talked about adapting to new requirements and changes in the environment, not adaptive planning. Adaptive planning is planning for all the things above. In other words, when you start a task, plan for the possibility of it changing, having to be abandoned or having to be redone in a different shape. And here is a point of much contention, because you can do this as any level. If you do it whenever you have to change the color of a button, you will overestimate all your work. If you do it at the level of the entire enterprise suite of products, you might as well do Waterfall. In any respect, it is the entire team that needs to know and decide what they plan for drastic changes.

Yet there are two types of reacting to change: planned or chaotic panic. Adaptive planning refers to planning for change, not preparing for working in complete chaos. That is why Scrum, as an example, splits planning into small periods of time (2-4 weeks) called sprints for which the work planned needs to be finished. If anything drastic (like a task losing its relevance) happens, the sprint is aborted. The sprints themselves can be in relative chaos, but inside one planning and order prevail. It doesn't mean planning for an irate boss man to come and tell you every day to change this and that. It means having a list of tasks that you can edit, whether by removing or adding tasks, rearranging their priority, or even adding tasks like "how to deal with this insane man coming into the office and spouting obscenities at me", then execute them in order. In no way does it mean that you do things by the ear or plan for something and then ignore it.

Two aspects remain to be discussed: evolutionary development and continuous improvement. Both strongly imply awareness of the development processes through which the teams goes. It's the team equivalent of introspection commonly referred to as retrospective.

What did we do? How does it compare with what we had planned? What does it mean for the future? How can we do better? and the Why of it is obvious, you've got a good thing, how can you get more of it? Yet this is the point that is hardest to put in a box. Various methodologies attempt to introduce metrics to measure progress and performance. Then, with the function of the team properly computed and numbers assigned to its yield, one can scientifically tweak the processes in order to get the most out of the resources the team has. This is a wonderful concept and I agree with it in spirit, but in practice frankly, I think it's bollocks. It pure shit. It's circular logic.

Here we are, discussing a beautiful philosophy of doing work for maximum benefit by letting a team of very diverse people organize themselves towards a common goal, being ready for anything, adapting to everything, pushing through no matter what by leveraging their individual strengths. And then we come and say "Wait, I can take all this, name it, measure it, put it into equations and then tell you how to improve it!". Well, why didn't you do that from the start, then? Why bother with all the self expression and being able to adapt to anything new? If all is old under the sun, why do Agile at all?!

I believe this is the part where Agile methodologies fail utterly and miserably and can't even admit to themselves that they have. I agree that progress needs to be visible and the ability to compare it with other instances is essential, but from here to measuring it numerically is a large distance. Forget the usual arguments against measuring team success: the team composition is not fixed, the type of work it different, team dynamics means people behave differently, personal and local events, and so on. It's bigger and simpler than that. I submit that since the entire concept of Agile rests on the principles of flexibility, the method of gauging success should also be flexible and especially the way the team processes are tweaked for the future.

And I have proof. In every company I've ever been or heard of the Agile methodology of the place didn't quite work in various ways and that was apparent when people were laughing or disrespecting that part to the point of ignoring the rules in place. And the common bit that didn't work for all of them is the retrospective. The idea of asking "what problems did we face?" and then immediately finding and enforcing solutions with "what can we do about it next time?" has become such a mantra that it is impossible to ask these questions separately. It's become reflex (to the horror of wives everywhere) when you hear "there is a problem" to ask immediately "what can we do to fix it?". There is an intermediate question that must be inserted there: "Is this really a problem or do I just have to be aware it happened? Can we ignore it?". This is another topic, though.

A short summary would be:

- responsibility is shared between all the members of the team and decisions should be taken as a team

- any member of the team is valued for their contribution towards reaching the team goal

- the manager is not your boss, it's just the role of a member of the team

- the Scrum master or Kanban master or whoever does the accounting in whatever methodology you use is still a member of the team

- having a member of the team understanding the needs of the customer is essential; they are the people you go to ask "Why?" all the time

- adaptation need to be written in the DNA of the methodology; the team must plan to change its plans, but still do things orderly

- experience should guide the team to define progress and improve itself

- transparency of the team goal is necessary for the team's success

- there is no improvement of the development method (or the code) without exploration and the entire intentional participation of the team

- never be afraid to ask why; if you work in an environment where you are afraid, consider changing it

Let me tell you the typical story of the development team. First there is chaos, someone decides they are a quick and dirty team and they can do anything, fast, cutting all corners into a straight line to hell. Mistakes are made, blame flies around, people are fired, emotions get high, results go nowhere. There is need for order, all cry out, we need to use the holy grail of software development and the greatest human invention since fire (that week, at least). And then a specialist is called. He either becomes part of the team, with the role of teaching and enforcing rules, or just teaches a few courses, gets their money and leaves before hapless fools try to put in practice whatever it is they thought they understood. The result is the same: developers first like it, then start grumbling about the indecency of having to fill out documentation before any code is written, or updating it, or adding tasks in whatever software is used (or Post-Its on a whiteboard, if you are a purist and still haven't figured out developers can't use a pen to write). So either morale plummets and the operations continue with diminishing results, or the grumbling becomes a rallying cry to make a change. And the change is... chaos again.

In short, just like a revolutionary South American country, they can't decide if they want freedom or dictatorship, because both options have big damn flaws and anything in between is sucking their life away.

But why?

It's because even methodologies are subject to change, to human whim, to altering conditions. If you look at the beginning of this enormous post, it all started with the main goal and meandered from that, just like an Agile methodology in a real firm does. What is the goal of the team? And don't you look at your manager to tell you. As a team, responsibility is shared, remember? If the purpose is to turn perfectly enthusiastic software developers into soulless drones... you should run away from there. But if it is not, think about the things you are doing. Are they helping or are they not helping? This week at least.

In conclusion (or retrospect?) I believe Agile is a great idea, but its underpinning principles of always being aware of the situation and adapting to it are a more general and useful concept to remember and live by. Truth and knowledge comes before adapting and many software shops fail there, so the methodology is irrelevant. Then the method of work chosen should reflect the flexibility it enables. I don't believe in a pure anything, and certainly not pure Scrum, pure Kanban, pure Agile, but whatever you cook up, it should work towards the real goal of the team, as known and acknowledged by everyone in it.

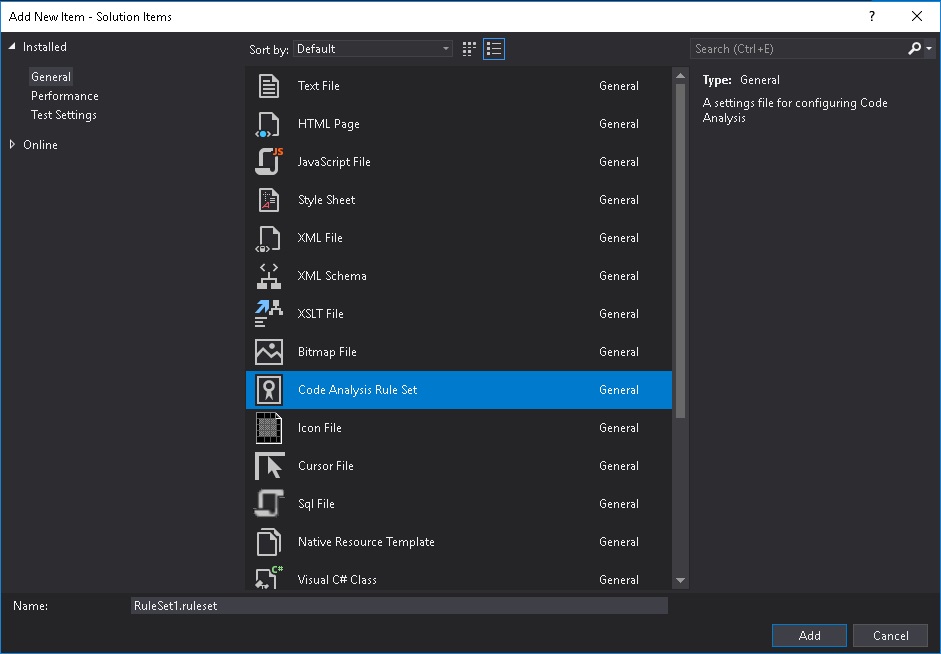

What's your function in life?  Update: there is an issue related to NuGet packages. I was recommending to run MsBuild with the command line option /t:Restore;Rebuild which should restore packages and rebuild the solution. However, as detailed here, the MSBuild Restore option only restores packages defined in the project PackageReference elements, not the ones in packages.config. In order to restore those, you still need to manually run nuget restore. Where do you get nuget.exe from? Obviously not from the Visual Studio Build Tools... but from the NuGet Gallery.

Update: there is an issue related to NuGet packages. I was recommending to run MsBuild with the command line option /t:Restore;Rebuild which should restore packages and rebuild the solution. However, as detailed here, the MSBuild Restore option only restores packages defined in the project PackageReference elements, not the ones in packages.config. In order to restore those, you still need to manually run nuget restore. Where do you get nuget.exe from? Obviously not from the Visual Studio Build Tools... but from the NuGet Gallery.