When refactoring is bad.

People who know me often snicker whenever someone utters "refactoring" nearby. I am a strong proponent of refactoring code and I have feelings almost as strong for the managers who disagree. Usually they have really stupid reasons for it, too. However, speaking with a colleague the other day, I realized that refactoring can be bad as well. So here I will explore this idea.

People who know me often snicker whenever someone utters "refactoring" nearby. I am a strong proponent of refactoring code and I have feelings almost as strong for the managers who disagree. Usually they have really stupid reasons for it, too. However, speaking with a colleague the other day, I realized that refactoring can be bad as well. So here I will explore this idea.Why refactor at all?

Refactoring is the process of rewriting code so that it is more readable and maintainable. It does not mean writing code to be readable and maintainable from the beginning and it does not mean that doing it you accept your code was not good when you first wrote it. Usually the scope of refactoring is larger than localized bits of code and takes into account several areas in your software. It also has the purpose of aligning your codebase with the inevitable scope creep in any project. But more than this, its primary use is to make easy the work of people that will work on the code later on, be it yourself or some colleague.

I was talking with this friend of mine and he explained to me how, especially in the game industry, managers are reluctant to spend resources in cleaning old code before actually starting work on new one, since release dates come fast and technologies change rapidly. I replied that, to me, refactoring is not something to be done before you write code, but after, as a phase of the development process. In fact, there was even a picture showing it on a wheel: planning, implementing, testing and bug fixing, refactoring. I searched for it, but I found so many other different ideas that I've decided it would be pointless to show it here. However, most of these images and presentation files specified maintenance as the final step of a software project. For most projects, use and maintenance is the longest phase in the cycle. It makes sense to invest in making it easier for your team.

So how could any of this be bad?

Well, there are types of projects that are

fire and forget, they disappear after a while, their codebase abandoned. Their maintenance phase is tiny or nonexistent and therefore refactoring the code has a limited value. But still it is not a case when refactoring is wrong, just less useful. I believe that there are situations where refactoring can have an adverse effect and that is exactly the scenario my friend mentioned: before starting to code. Let me expand on that.

Refactoring is a process of rewriting code, which implies you not only have a codebase you want to rewrite, but also that you know how to do it. Except very limited cases where some project is bought by another company with a lot more experienced developers and you just need to clean up garbage, there is no need to touch code that you are just beginning to understand. To refactor after you've finished a planned development phase (a Scrum sprint, for example, or a completed feature) is easy, since you understand how the code was written, what the requirements have become, maybe you are lucky enough to have unit tests on the working code, etc. It's the

now I have it working, let's clean it up a littlephase. Alternately, doing it when you want to add things is bad because you barely remember what and who did anything. Moreover, you probably want to add features, so changing the old code to accommodate adding some other code makes little sense. Management will surely not only not approve, but even consider it a hostile request from a stupid techie who only cares about the beauty of code and doesn't understand the commercial realities of the project. So suggest something like this and you risk souring the entire team on the prospect of refactoring code.

Another refactoring antipattern is when someone decides the architecture needs to be more flexible, so flexible that it could do anything, therefore they rearchitect the whole thing, using software patterns and high level concepts, but ignoring the actual functionality of the existing code and the level of seniority in their team. In fact, I wouldn't even call this refactoring, since it doesn't address problems with code structure, but rewrites it completely. It's not making sure your building is sturdy and all water pipes are new, it's demolishing everything, building something else, then bringing the same furniture in. Indeed, even as I like beautiful code, doing changes to it solely to make it prettier or to make you feel smarter is dead wrong. What will probably happen is that people will get confused on the grand scheme of things and, without expensive supervision in terms of time and other resources, they will start to cut corners and erode the architecture in order to write simpler code.

There is a system where software is released in "versions". So people just write crappy code and pile features one over the other, in the knowledge that if the project has success, then the next version will be well written. However, that rarely happens. Rewriting money making code is perceived as a loss by the financial managers. Trust me on this: the shitty code you write today will haunt you for the rest of the project's lifetime and even in its afterlife, when other projects are started from cannibalized codebases. However, I am not a proponent of writing code right from the beginning, mostly because no one actually knows what it should really do until they end writing it.

Refactoring is often associated with Test Driven Development, probably because they are both difficult to sell to management. It would be a mistake to think that refactoring is useful only in that context. Sure, it is a best practice to have unit tests on the piece of code you need to refactor, but let's face it, reality is hard enough as it is.

Last, but not least, is the partial or incomplete refactoring. It starts and sometime around the middle of the effort new feature requests arrive. The refactoring is "paused", but now part of your code is written one way and the rest another. The perception is that refactoring was not only useless, but even detrimental. Same when you decide to do it and then allow yourself to avoid it or postpone it and you do it badly enough it doesn't help at all. Doing it for the sake of saying you do it is plain bad.

The right time and the right people

I personally believe that refactoring should be done at the end of each development interval, when you are still familiar with the feature and its implementation. Doing it like this doesn't even need special approval, it's just the way things are done, it's the shop culture. It is not what you do after code review - simple code cleaning suggested by people who took five minutes to look it over - it is a team effort to discuss which elements are difficult to maintain or are easy to simplify or reuse or encapsulate. It is not a job for juniors, either. You don't grab the youngest guy in the team and you let him rearrange the code of more experienced people, even if that seems to teach the guy a lot. Also, this is not something that senior devs are allowed to do in their spare time. They might like it, but it is your responsibility to care about the project, not something you expect your team to do when you are too lazy or too cheap. Finally, refactoring is not an excuse to write bad code in the hope you will fix it later.

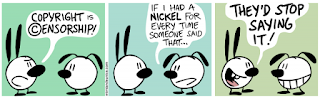

By the way I am talking about this you probably believe I've worked in many teams where refactoring was second nature and no one would doubt its utility. You would be wrong. Because it is poorly understood, the reaction of non technical people in a software team to the concept of refactoring usually falls in the interval between condescension and terror. Money people don't understand why change something that works, managers can't sell it as a good thing, production and art people don't care. Even worse, most technical people will rather write new stuff than rearrange old stuff and some might even take offense at attempts to make "their code" better. But they will start to mutter and complain a lot when they will get to the maintenance phase or when they will have to write features over old code, maybe even theirs, and they will have difficulty understanding why the code is not written in a way in which their work would be easy. And when managers will go to their dashboards and compare team productivity they will raise eyebrows at a chart that shows clear signs of slowing down.

Refactoring has a nasty side effect: it threatens jobs. If the code would be clean and any change easy to perform, then there will be a lot of pressure on the decision makers to justify their job. They will have to come with relevant new ideas all the time. If the effort to maintain code or add new features is small, there will be pressure on developers to justify their job as well. Why keep a large team for a project that can easily accommodate a few junior devs that occasionally add something. Refactoring is the bane of the type of worker than does their job confusingly enough that only they can continue to do it or pretend to be managing a difficult project, but they are the ones that make it be so. So in certain situations, for example in single product companies, refactoring will make people fear they will be made redundant. Yet in others it will accelerate the speed of development for new projects, improve morale and win a shit load of money.

So my parting thoughts are these: sell it right and do it right! Most likely it will have a positive effect on the entire project and team. People will be happier and more productive, which means their bosses will be happier and filthy richer. Do it badly or sell it wrong and you will alienate people and curse shitty code for as long as you work there.