Learning chess using Lichess Interactive Studies

Quick update

I have solved many of my issues with Lichess through my own Chrome/Edge extension, LiChess Tools. I am very proud of it and I invite you to use it. It even enhances the Interactive lesson mode described below so that you can explore ALL the variations in a chapter!

Now back to the original post:

Intro

It's impossible to play chess and not have heard of Lichess. It's a website that started with the lofty goal of providing a completely free, ad-free place where people can play chess. One of its features is the Study, which is similar to the Analysis board but allows for multiple chapters, persistent comments and annotations, a unique URL, and embedding into a web page.

There are multiple analysis modes for a chapter: Normal analysis, Practice with computer, Hide next moves and Interactive lesson. I think Hide next moves is mainly used for embedding chess puzzles into websites, while Practice with computer is a mode I have not played with yet. Normal analysis presents the classic board with various tools: the moves played by people of various levels in a position, a chess engine running in the browser, and a server-side analysis that you can run on the main line of a PGN.

However, I am here to show you how to use Interactive lesson mode, simply and without confusion, to quickly improve your game and perfect your play.

If you want to see the example study first and only then read about how it was made, go directly to the Demo.

It's not that

When I first heard of this option I was elated. I expected to take my very complicated PGN explorations, paste them into the study, then have the computer play the other side based on the moves in the PGN. While I still hope the Lichess developers will create a study mode that works with a complete PGN, Interactive lesson mode does not do that yet. I hear that Tarrasch UI does have an option like that, but I haven't used it yet, so maybe I will update this post after I try it. Arena Chess GUI is my tool of choice for game analysis on my computer.

Edit: I've installed Tarrasch and it kind of works, even if the option is rather primitive. You take a PGN (for example the Lichess PGN of all of your games, or of another player's) and set it up as the opening book. Two other options then set how many moves to take from the book and what percentage of moves to take from it. So setting the first to a very large number and the percentage to 100 makes the UI play exactly like the other player. The problem, however, is that it doesn't store how likely a player is to make each move. It just combines all the games into one big PGN and plays from it. If I played e4 once and d4 1000 times, the computer will play each of them 50% of the time. Bummer!

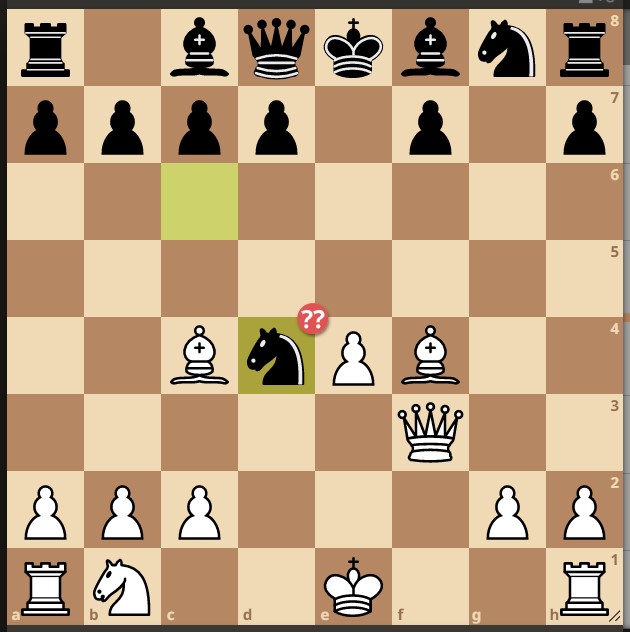
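To make the difference concrete, here is a small Python sketch of the two ways a book could pick moves. It is not Tarrasch's code; the move counts are the hypothetical ones from the example (e4 once, d4 a thousand times), and `uniform_book` and `weighted_book` are illustrative names.

```python
from collections import Counter

# Hypothetical data matching the example above: the first move chosen
# in each game, e4 once and d4 a thousand times.
first_moves = ["e4"] * 1 + ["d4"] * 1000
counts = Counter(first_moves)

def uniform_book(counts):
    """Every distinct book move is equally likely (the behavior described above)."""
    return {move: 1 / len(counts) for move in counts}

def weighted_book(counts):
    """Each book move is chosen in proportion to how often it was played."""
    total = sum(counts.values())
    return {move: n / total for move, n in counts.items()}

print(uniform_book(counts))   # {'e4': 0.5, 'd4': 0.5}
print(weighted_book(counts))  # e4 about 0.1%, d4 about 99.9%
```

A program that kept these counts could then pick a move with `random.choices(list(book), weights=list(book.values()))`, which is what "playing like the other player" would actually require.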

OK, so maybe it follows the main line, but what if I go into a branch line? Surely it will allow me to continue, because I have marked the good moves as good and the bad moves as bad. Nope! The main line is the only line the Interactive lesson will follow. You can add other moves, but they are automatically considered bad, and their comments are shown to the user when they try to play them.

It is called interactive, so maybe it has all kinds of bells and whistles that I can add to make it more like a fun game! Again, no. Interactive only means that you can learn a specific line by following it ad nauseam, with some helpful graphical hints, comments and annotations baked in. For each line that you want to explore, you have to build a different chapter, and a study can have at most 32 chapters. If you make a different move, you get no feedback more meaningful than a prerecorded comment telling you that you didn't play the correct move. That being said, when creating a new chapter you can import a PGN containing multiple games, which creates a chapter for each of the games (again, up to 32).

One other glaring limitation of studies in general is that they barely work in the mobile app, and Interactive lessons are not supported there at all.

But it's that

But once I found the proper way of using it, I realized that it can actually help me improve my game a lot. Why? Because it helps with repetition and memory, which are not my strong points. So here are my recommendations on how to use the tool.

Create a PGN with all the lines that you want to explore

Yes, I know I said Interactive mode doesn't handle multiple lines, but this is the starting point of your efforts. You need one anyway to first determine what you want to study. Let's say you have learned of the newest tricky gambit line and you want to beat all your friends with it, but you can't practice it without letting them in on it.

There are multiple ways of generating this source PGN. You can watch your favorite YouTuber going through the variations, create a study and follow their moves in the first chapter. You can start from the Analysis Board and transform it into a Study when you need to add comments and stuff. Don't worry about chapters at this stage. Just build your PGN. You can take your own games in that particular opening or variation and add them to the PGN. You can check out the games of other people or use the Opening Explorer and Tablebase to find the most common moves people play from a position.

Do add comments and graphical hints as you go along (right click and drag the mouse for arrows, right click on squares for circles, press combinations of Ctrl and Alt for various colors). It will be important later.

Split your PGN into individual lines

This might feel painful at the beginning, but in order to examine lines from your PGN interactively you need to remove all other moves. You already have your PGN, so just add a new chapter for each line and use the option to copy from the first chapter. You do this by selecting the chapter you want to copy from, then using the new chapter option. Don't forget to choose Interactive lesson as the Analysis mode (after the first copy, you can copy from the most recently added chapter instead, since it's identical to the first but already has Interactive lesson mode selected). Let's say that you started with a small PGN with a main line and an alternate branch that splits again later on. That means 3 variations. Copy the PGN into 3 new chapters, then in each one delete all the moves that don't belong to that chapter's line. Keep the first chapter with the complete PGN and the Normal analysis mode.
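The number of chapters you need is simply the number of places where a variation ends. Here is a minimal Python sketch that lists every line from the start position to the end of a variation, one per chapter. It assumes the PGN has already been parsed into a move tree; the `Node` class and `lines` function are hypothetical illustrations, not a Lichess or PGN-library structure.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    """One move (in SAN) and the moves that can follow it.
    children[0] is the main continuation, the rest are side variations."""
    san: str
    children: list["Node"] = field(default_factory=list)

def lines(children, prefix=()):
    """Yield every path from the start position to the end of a variation."""
    for node in children:
        path = prefix + (node.san,)
        if node.children:
            yield from lines(node.children, path)
        else:
            yield list(path)

# 1. e4 e5 2. Nf3 (2. f4 exf4 (2... d5)): a main line plus one branch
# that splits again, so three lines and three chapters.
tree = [Node("e4", [Node("e5", [
    Node("Nf3"),
    Node("f4", [Node("exf4"), Node("d5")]),
])])]

for line in lines(tree):
    print(" ".join(line))
# e4 e5 Nf3
# e4 e5 f4 exf4
# e4 e5 f4 d5
```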

Play chapters repeatedly

In order to play the chapters yourself, you need to press the Preview button. Alternatively, open your study in a browser where you are not logged in to Lichess, but that requires the study to be public, and updating it as you play will be cumbersome.

So start playing the chapters, in order or randomly, again and again. You will start to reap the benefits of spaced repetition, without the stress of playing against another person and without getting distracted by other stages of the game.

Eventually, you will notice some moves that are hard to remember. Exit preview mode by pressing the Preview button again, then add hints, comments, graphical hints, etc. This will help you when you get to the same spot a few weeks later.

Helping yourself and others

There are several ways to nudge people going through the lesson. Most are helpful, but they can also be detrimental or too revealing.

You can add a Hint, which will appear if the user gives up and clicks on Hint. They also have the option of seeing the next move, if they really give up. I guess this can be helpful if the hint is vague enough. Something like "move to e4" is the same as the option of seeing the solution, so pointless. Something like "move the queen!" is not much better. However, something that talks about the principles of the position rather than the specifics not only helps cement the theory in the mind of the student, but also helps you, the author, clarify those principles as you search for the correct hint!

You can make a move for your side, then add a special type of comment that pops up if the user plays any other move. You can also add moves other than the main move to the PGN, each with a comment explaining why it is not the right one, which supersedes the generic comment. For example, you could use "Not that move, dummy!", which is not very helpful but works as a generic message, then play the next best move (or perhaps even a better one, depending on who you ask) and comment on it "That's an even better move, but not in the spirit of this opening" or something like that.
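The rules above boil down to a simple lookup. This Python sketch is only a mental model of the behavior described here, not Lichess code; `Step`, `feedback` and the example moves are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    """A hypothetical model of one student move in an Interactive lesson."""
    expected: str      # the main-line move the student must find
    generic_fail: str  # the special comment shown for any other move
    side_comments: dict[str, str] = field(default_factory=dict)  # side move -> its own comment

def feedback(step, played):
    """Return None for the correct move, otherwise the comment to display."""
    if played == step.expected:
        return None
    # A side variation's own comment takes precedence over the generic one.
    return step.side_comments.get(played, step.generic_fail)

step = Step(
    expected="Nf3",
    generic_fail="Not that move, dummy!",
    side_comments={"Nc3": "That's an even better move, but not in the spirit of this opening"},
)
print(feedback(step, "Nf3"))  # None
print(feedback(step, "Nc3"))  # That's an even better move, but not in the spirit of this opening
print(feedback(step, "h4"))   # Not that move, dummy!
```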

Note that you can add comments to your side's moves, but that forces the student to press Space to go to the next move, which might get annoying. Alternatively, if you write comments on the opponent's moves, the pause doesn't happen, since it's the student's turn to move and they can read the comment at their leisure.

The same ideas apply to arrows and squares. You might use them to convey general plans, or perhaps the very specific plans that follow the last move in the chapter. Show too much and you guide your user towards the move, preventing them from learning. Be consistent with your colors. I personally use the default green for future moves, blue for intentions or plans, red for what the pieces are attacking, and yellow for the best move that the opponent should have played but didn't.

Annotations are also very helpful, showing the student that the move is good or interesting or brilliant. They come with no extra information and do not pause the lesson.

Extra tools

There are several things that I found irritatingly missing from the Study feature, so I've built my own solutions using the CJS and Stylish Chrome extensions, which allow me to run custom JavaScript and add CSS styles on specific sites. I would still prefer to have these implemented by the Lichess developers, though. I plan a future blog post about those tools; let me know if you're interested.

One of the tools shows where variations end. In a large PGN it is hard to tell, yet you need to know where each variation ends, either to extend it or at least to finish it with a comment. My script adds a CSS class to the last move of a variation, and another class if that move has no comment attached. With my styles, those moves appear brighter, and also underlined if they are not commented.
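The browser script itself isn't shown here. As a rough offline analogue, here is a Python sketch that walks a parsed move tree and reports the last move of each variation and whether it has a comment. The `Node` class and `variation_ends` function are hypothetical, not the structure Lichess uses on the page.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    san: str
    comment: str = ""
    children: list["Node"] = field(default_factory=list)

def variation_ends(children, prefix=()):
    """Yield (line, has_comment) for the last move of every variation."""
    for node in children:
        path = prefix + (node.san,)
        if node.children:
            yield from variation_ends(node.children, path)
        else:
            yield " ".join(path), bool(node.comment.strip())

tree = [Node("e4", children=[Node("e5", children=[
    Node("Nf3", comment="Main line, develop and attack e5."),
    Node("f4"),  # a variation that ends without a comment
])])]

for line, commented in variation_ends(tree):
    print(line, "(ok)" if commented else "(needs a comment)")
# e4 e5 Nf3 (ok)
# e4 e5 f4 (needs a comment)
```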

Another one handles transpositions. Chess masters, of course, look at the board and immediately recognize the position from a game they played against Magnus Carlsen three years ago, but regular people trying to cover all the branches of a PGN don't know whether they have reached a position before. My script adds a CSS class to all moves that lead to the same position as the currently active move. It's not perfect, though: it only finds the same position reached through a different order of the same moves, not through different moves.
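A different way to spot transpositions (a swapped-in technique, not how the script described above works) is to compare positions instead of move orders. Assuming you have the FEN after each move, for example from a chess library or an export, moves whose FENs match once the move counters are ignored reach the same position, whether through a different order or through different moves. A minimal Python sketch under those assumptions; `position_key`, `transpositions` and the sample data are illustrative.

```python
from collections import defaultdict

def position_key(fen):
    """Keep piece placement, side to move, castling rights and en passant square;
    drop the halfmove clock and fullmove number, which can differ between move orders."""
    # Note: tools differ on when they record an en passant square, which can hide a match.
    return " ".join(fen.split()[:4])

def transpositions(nodes):
    """nodes: (move sequence, FEN after the last move) pairs.
    Returns the groups of move sequences that reach the same position."""
    groups = defaultdict(list)
    for moves, fen in nodes:
        groups[position_key(fen)].append(moves)
    return [same for same in groups.values() if len(same) > 1]

nodes = [
    ("1. d4 Nf6 2. c4 e6", "rnbqkb1r/pppp1ppp/4pn2/8/2PP4/8/PP2PPPP/RNBQKBNR w KQkq - 0 3"),
    ("1. c4 e6 2. d4 Nf6", "rnbqkb1r/pppp1ppp/4pn2/8/2PP4/8/PP2PPPP/RNBQKBNR w KQkq - 1 3"),
    ("1. Nf3 Nf6", "rnbqkb1r/pppppppp/5n2/8/8/5N2/PPPPPPPP/RNBQKB1R w KQkq - 2 2"),
]
print(transpositions(nodes))
# [['1. d4 Nf6 2. c4 e6', '1. c4 e6 2. d4 Nf6']]
```

The first two FENs differ only in the halfmove clock, which is why the key leaves the counters out.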

Extracting a line from a PGN would have come in handy as well. There is an option to make a variation the main line, but none to remove all the other branches. That makes the initial study creation a bit cumbersome, especially if you are like me, accumulating lines in the PGN and then realizing that a study can't hold 523 chapters.
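Offline, extracting a line is a short recursive copy. This Python sketch assumes a parsed move tree; `Node`, `extract_line` and `main_line` are hypothetical helpers, not a Lichess feature.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    san: str
    comment: str = ""
    children: list["Node"] = field(default_factory=list)

def extract_line(children, path):
    """Return a new tree holding only the moves in `path` (with their comments);
    every other branch, and anything after the last move of `path`, is dropped."""
    if not path:
        return []
    for node in children:
        if node.san == path[0]:
            return [Node(node.san, node.comment, extract_line(node.children, path[1:]))]
    raise ValueError(f"move {path[0]!r} is not in the tree at this point")

def main_line(children):
    """Follow the first continuation at every step."""
    moves = []
    while children:
        moves.append(children[0].san)
        children = children[0].children
    return moves

tree = [Node("e4", children=[Node("e5", children=[
    Node("Nf3"),
    Node("f4", "King's Gambit", [Node("exf4"), Node("d5", "Falkbeer")]),
])])]

chapter = extract_line(tree, ["e4", "e5", "f4", "d5"])
print(main_line(chapter))  # ['e4', 'e5', 'f4', 'd5']
```

The original tree is left untouched, which mirrors keeping the complete PGN in the first chapter.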

Merging PGNs would also be very useful, and as far as I know Lichess doesn't offer it. You can add any game from the Opening explorer and tablebase, but not your own games or any other arbitrary ones. Merging would help a lot with studies as well.
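Merging two move trees means unifying the moves they share and turning the rest into side variations. This Python sketch assumes both games are already parsed; `Node`, `merge` and `chain` are hypothetical helpers, and keeping the first comment when both trees have one is an arbitrary choice.

```python
import copy
from dataclasses import dataclass, field

@dataclass
class Node:
    san: str
    comment: str = ""
    children: list["Node"] = field(default_factory=list)

def merge(into, other):
    """Merge the move list `other` into `into` in place.
    Shared moves are unified; new moves are appended as side variations."""
    for node in other:
        match = next((n for n in into if n.san == node.san), None)
        if match is None:
            into.append(copy.deepcopy(node))  # copy so the two trees don't share nodes
        else:
            if not match.comment:
                match.comment = node.comment  # keep an existing comment, else take the other one
            merge(match.children, node.children)
    return into

def chain(*moves):
    """Build a single line (no branches) from SAN moves."""
    children = []
    for san in reversed(moves):
        children = [Node(san, children=children)]
    return children

game_a = chain("e4", "e5", "Nf3")
game_b = chain("e4", "c5", "Nf3")
merged = merge(game_a, game_b)
print([child.san for child in merged[0].children])  # ['e5', 'c5']
```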

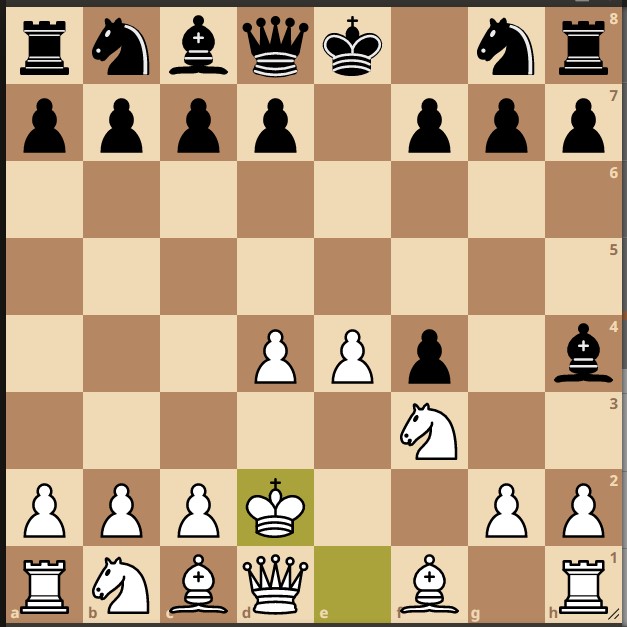

Demo

Below I share one of my own interactive studies, public and shareable, hoping it will help you guys use this wonderful tool to great effect. For inspiration I used one of GM Igor Smirnov's YouTube videos. He is great, but I also chose it because he usually shares his Lichess studies :)

So these are the steps I followed to create the study:

- I took the two games in the study shared by Igor and manually merged them into a single PGN

- I created a new study, then pasted the PGN as the first chapter

- Doctored the PGN to eliminate the transposition at move 4

- I watched the video and added new moves from it

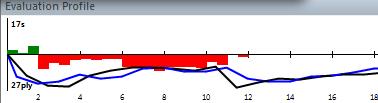

- I looked for interesting positions in the two games and explored some branches, adding comments related to everything from my own opinions to the evaluation Stockfish gives

- Created a new Interactive lesson chapter for each important variation

- Went to each chapter and promoted that chapter's variation to main line

- Removed all side lines in each chapter (perhaps leaving the starting move only, so I can mark it with a special reference to its specific chapter)

- Played each chapter again and again, trying to identify the hard-to-find moves and the general plans of the opening

- Shared the study with all of you!

Here is the result:

What to do next

At the beginning, going through the study, you will first learn the moves and maybe find some places that need hints or a nudge in the right direction. But then you will start to play actual games using what you've learned, and you are going to meet opponents who play completely different moves from the main line. Obviously they are doing something wrong, but you don't know how to punish them.

So here is what you do: update the original PGN if you want, then create a chapter for each of these troublesome lines. Follow them through using chess engines or some other way of understanding the position: maybe a teacher, master games, or the moves most commonly played in that position. Then go back to playing through the chapters.

It's that easy. This replaces the passive review of your games with actively searching for a solution and, even more actively, playing through it again and again.

Conclusion

You can use the Interactive lesson mode for spaced repetition learning, to get a feel for new openings, or to rehearse the positions that give you the most problems. While the current implementation of the feature is very useful as it is, some simple additions would make it much more user-friendly. I am still searching for additional tools to complement it and will update the post as I go along.

Hope it helps!