This is something I have been hitting my head on from the beginning of my programming career: just find the best match in a table for each row in another table through a single query.

There are solutions, but they are all very inefficient. To demonstrate the issue I will start with a simple structure: tables A and B, having the same columns id, x and y. I want to get, for each point in table A defined by the (x,y) coordinates, the closest point in table B. I only need one and it doesn't need to be exclusive (other points in A might be closest to the same point). It doesn't even have to be one row in B for each row in A, in case there are two points at the exact same distance to a point in A. The creation of the structure is done here:

CREATE TABLE A(id INT PRIMARY KEY IDENTITY(1,1), x FLOAT, y FLOAT)

INSERT INTO A (x,y) VALUES(10,20),(20,30),(20,10),(30,20),(30,20),(10,30)

CREATE TABLE B(id INT PRIMARY KEY IDENTITY(1,1), x FLOAT, y FLOAT)

INSERT INTO B (x,y) VALUES(11,20),(20,31),(21,10),(31,21),(30,20),(11,30)

To find the distance from A to the closest point in B is trivial:

SELECT a.id,

a.x,

a.y,

Min(( a.x - b.x ) * ( a.x - b.x ) + ( a.y - b.y ) * ( a.y - b.y )) AS

dist

FROM a

CROSS JOIN b

GROUP BY a.id,

a.x,

a.y

To get the id of the closest B point, not so easy.

The first naive solution would be to just find the row in B that corresponds to each row in A using nested selects, like this:

SELECT *

FROM a

JOIN b

ON b.id = (SELECT TOP 1 b.id

FROM b

ORDER BY ( a.x - b.x ) * ( a.x - b.x ) + ( a.y - b.y ) * ( a.y - b.y ) ASC)

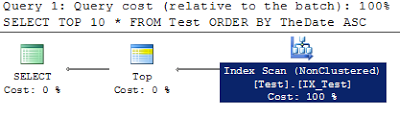

Looking at the execution plan we see what is going on: 86% of the query is spent on "Top N Sort".

Let's get some other solutions so we can compare them in the end in the same execution plan.

Another solution is to just use the result of the query that computes the distance and just join again on the distance. That means we would compare each row in A with each row in B twice, once for the computation of the MIN function and the other for the join:

SELECT j.*,

b2.*

FROM (SELECT

a.id,

a.x,

a.y,

Min(( a.x - b.x ) * ( a.x - b.x ) + ( a.y - b.y ) * ( a.y - b.y )) AS m

FROM a

CROSS JOIN b

GROUP BY a.id,

a.x,

a.y) j

INNER JOIN b b2

ON j.m = ( j.x - b2.x ) * ( j.x - b2.x ) + ( j.y - b2.y ) * ( j.y - b2.y )

Something that does the same thing, essentially, but looks a little better is joining the table A with B and then again with B on having the point from B2 be closer to the one in B1, but then adding a condition that there is no B2 (in other words, B1 is closest):

SELECT a.*,

b1.*

FROM a

CROSS JOIN b b1

LEFT JOIN b b2

ON ( a.x - b1.x ) * ( a.x - b1.x ) + ( a.y - b1.y ) *

( a.y - b1.y ) >

( a.x - b2.x ) * ( a.x - b2.x ) + ( a.y - b2.y ) *

( a.y - b2.y )

WHERE b2.id IS NULL

None of these solutions scan B only once for each row in A. Their relative complexity is this: 75%, 11% and 14%, respectively. In other words, finding the minimum distance and then joining with the B table again on the points that are in exactly that distance is the best solution. However, given some assumptions and a weird structure, we can get to something that runs in half that time:

SELECT id AS Aid,

x,

y,

m % 100 AS bId

FROM (SELECT a.id,

a.x,

a.y,

Min(Cast( ( ( a.x - b.x ) * ( a.x - b.x ) + ( a.y - b.y ) * ( a.y - b.y ) ) AS BIGINT) * 100 + b.id) AS m

FROM a

CROSS JOIN b

GROUP BY a.id,

a.x,

a.y) j

These are the assumptions that must be true in order for this to work:

- The function value can be converted to a BIGINT without problems. (if the distance between points would have been subunitary, this would have lost precision)

- The maximum ID in table B is under a certain value (in this case 100)

- The converted function multiplied by this maximum number doesn't cause an overflow

Basically I am mathematically creating a container for the value of the function and the id of the point in B, computing the minimum, then extracting the id back from the value. Neat.

Another solution, one that makes most apparent sense, is using a feature that was introduced in SQL Server 2005: RANK. We rank the points in B to each point in A, based on our function, then we only get the first by selecting on the rank being 1. Unfortunately, this doesn't work as expected. First of all, you cannot use RANK in the WHERE clause, so you must select the rank first, then select from that selection to add the condition. This might mean horrid temporary data tables if tables A and B are huge. Also, after running the query, it appears it is slower than the one that joins on the minimum distance. Here it is:

SELECT aid,

bid

FROM (SELECT a.id AS aId,

a.x,

a.y,

b.id AS bId,

Rank()

OVER(

partition BY a.id

ORDER BY (a.x-b.x)*(a.x-b.x)+(a.y-b.y)*(a.y-b.y) ASC) AS rnk

FROM a

CROSS JOIN b) x

WHERE rnk = 1

Comparing all the solutions so far, without the first naive one, with the nested selects, we get these values:

- Mathematical container of function value and id: 14%

- Selection of the minimum distance to each point and then joining with table B for the second time to look for the point that is at that distance: 21%

- Joining twice on the same table with the condition that one is better than the other and that the better one doesn't actually exist: 29%

- Using RANK: 36%, most surprisingly the worst solution

The final solution, adding some more computation in order to get rid of constants and some assumptions thus becomes:

DECLARE @MaxId BIGINT

SELECT @MaxId = Isnull(Max(id) + 1, 1)

FROM B;

WITH q AS (SELECT A.id,

A.x,

A.y,

Min(Cast(Power(A.x-B.x, 2) + Power(A.y-B.y, 2) AS BIGINT) * @MaxId + B.id) AS m

FROM A

CROSS JOIN B

GROUP BY A.id,

A.x,

A.y)

SELECT id AS aId,

x,

y,

m % @MaxId AS bId

FROM q;

I am still looking and there is now a question on StackOverflow that attempts to get the answer from the community, so far with limited results.  It is a bit embarrassing not knowing this at my level of software development, but I was stunned to see other people, even more experienced than I, had the same lack of knowledge. Apparently Microsoft SQL Server converts empty or whitespace strings to default values when using CONVERT or CAST. So CONVERT(INT,''), equivalent to CAST('' as INT), equals 0. DATETIME conversion leads to a value of 1900-01-01. And so on. That means that a good practice for data conversion when you don't know what data you may be getting is to always turn whitespace to null before using CONVERT or CAST. Also, in related news, newline is NOT whitespace in T-SQL so LTRIM(CHAR(10)) and LTRIM(CHAR(13)) is not empty string!

It is a bit embarrassing not knowing this at my level of software development, but I was stunned to see other people, even more experienced than I, had the same lack of knowledge. Apparently Microsoft SQL Server converts empty or whitespace strings to default values when using CONVERT or CAST. So CONVERT(INT,''), equivalent to CAST('' as INT), equals 0. DATETIME conversion leads to a value of 1900-01-01. And so on. That means that a good practice for data conversion when you don't know what data you may be getting is to always turn whitespace to null before using CONVERT or CAST. Also, in related news, newline is NOT whitespace in T-SQL so LTRIM(CHAR(10)) and LTRIM(CHAR(13)) is not empty string!