Madeira is a beautiful island and, even though we went during the summer, I understand that it is even more beautiful in spring, when the flowers bloom. Yet if you want to have fun, you must avoid the tourist traps with their inflated prices and know how the island is structured.

What is Madeira

But first things first: Madeira is a Portuguese island, even though it is clearly closer to Africa than to Europe (its latitude is a bit below Casablanca's and above Marrakesh's). Like the Azores, its archipelago is an autonomous region, which means it kind of has its own local government, complete with a president, while still being part of Portugal and the European Union. Tourism is by far its greatest source of income (more than 70%). Early in its history, though, it provided sugar for most of the world, was a vibrant hub of maritime commerce and funded much of the Portuguese era of exploration and expansion.

It is a volcanic island of only about 800 km², yet its highest elevation is 1861 m. And while it sits at a pretty southern latitude, it is surrounded by water, which gives it a distinct climate. Water evaporates around the island, then condenses on its slopes as a light mist. Rain is relatively rare, but the island gets a lot of water through this system. For tourists, this matters because the locals have built kilometers of tiny cement canals to distribute the water running down the slopes to the farmlands. Next to these canals there are paths that can be hiked at gentle gradients and under the forest shade. Shade is important because, while the climate is mild, rarely going above 30°C, the sun is relentless, so don't forget your hats and your sunscreen.

The capital of the island is Funchal, home to a little more than a hundred thousand people. Considering the entire island population is a quarter of a million, you can see why most hotels, shops, restaurants, bars, clubs and beautiful buildings are there. This is also, I want to say, the least interesting part of the island. In about two or three days you can visit almost everything in the city proper. Add one more for things farther away, like some gardens you can reach using cable cars and stuff like that, and you don't need more than four days. The rest of the island, though, has a lot of beautiful scenery, strange and different little villages, authentic housing and history, and is generally more interesting. There is a caveat, though.

Take care

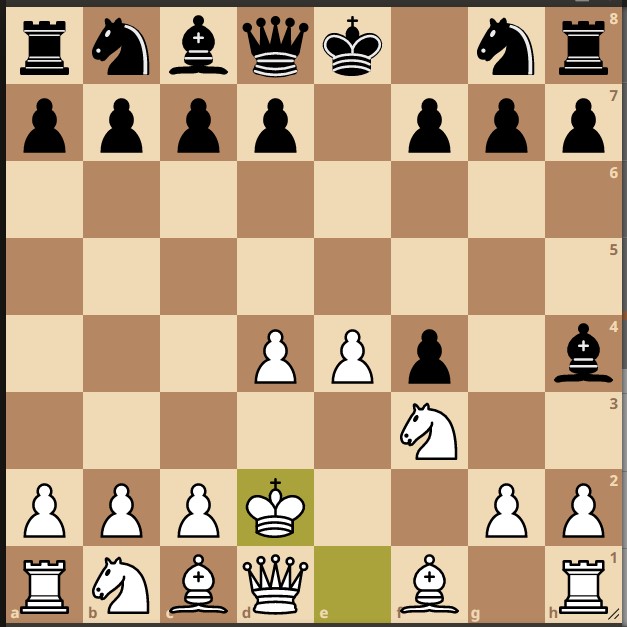

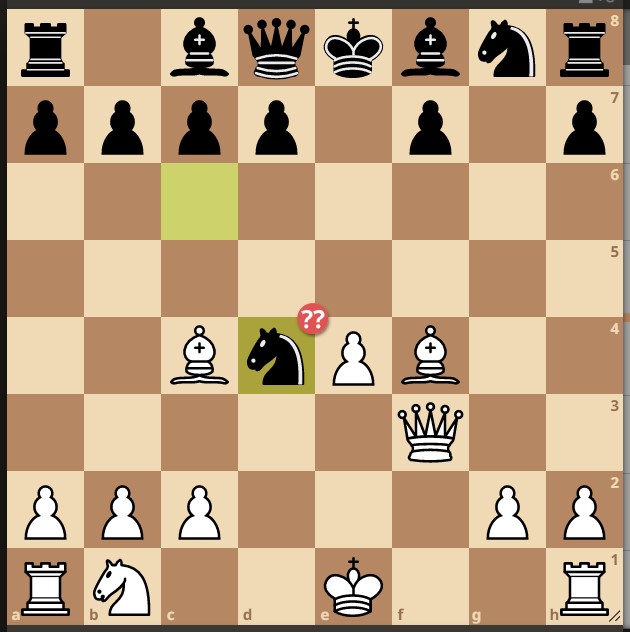

The roads in Madeira, even if new, very well done and paid for by the European Union, with kilometers-long tunnels and good asphalt, are convoluted and very steep. It would be a terrible mistake, in my opinion, to come to the island and rent a car. There are roads where you can't use the brake or your car starts to tumble. They are also not very wide, meaning that you have to know the unwritten driving protocol on the island and sometimes have to stop and go in reverse on a curved road that is 45 degrees steep. Also, how could you properly enjoy the landscape if in constant fear for your life? Luckily, there are a lot of buses you can take, even if they have pretty short schedules (around 18:00 you must start considering taxis or Bolt). BTW, Uber doesn't work in Madeira, so you need to install Bolt, which has the usual "car sharing" service, but also integrates with local taxi cabs.

Don't buy tickets for the Hop on/Hop off buses, as they run infrequently (every 45 minutes to an hour) and their schedule ends at 18:00. The drivers also take lunch breaks, so around that time the gap between getting off and getting back on again is two hours. Local buses might look more daunting, but they are just as good and you can pay when you get on. Mind that they are also infrequent and you should pay attention to their schedule, but there are a lot more lines.

Also, while I did not take the opportunity to see for myself, sea tours (like whale and dolphin watching) might not be as good as you think. Boats are not allowed to go closer than 50 m to the animals and the best experience, I am told, is to get on the Zodiac boats, together with a lot of other people. I don't know about you, but bobbing up and down at speed in an air-filled plastic boat doesn't sound fun. Trips to the neighboring islands, which I hear are beautiful, are also three hours long each way, so prepare to either stay the night or spend six hours in total just going back and forth. But if you like the sea, all of these might be worth it.

The last warning is about the tourist traps. Funchal is basically one big tourist trap, where all the prices are at least twice what they are on the rest of the island. But even if you go to neighboring towns, there are the visible places that tourists go to and then there are the places where the locals go. The problem is not so much the price as the inauthentic experience. You don't want to spend the time and resources to go to Madeira just to get the same experience you would get in any other city in the world, including the one you left from.

The trip

In order to get to the island, we took a charter plane as part of a travel agency package. There was no other direct flight from my city of Bucharest to Madeira and I suspect most travel there is monopolized through deals between tour agencies, local hotels and the airport. However, even if you don't have a lot of choice on where to stay, you don't have to follow the plan that the agency has for you when you get there, so we made our own plans.

The hotel we stayed at was Four Views Baía (pronounced Bah-ee-ah), a decent building with 11 floors, an outdoor pool and a spa complete with an indoor heated pool and sauna. It had a pretty good view, too, as you got to see most of Funchal and the ocean, but it was next to busy, noisy streets as well. The most valuable quality of the hotel is that it's 16+, so no kids at meals or in the pool, no noise during the night, etc. However, the service was stupidly bad. The restaurant offered buffet meals, but with the cheapest food, which didn't even seem local, and what must be the lowest paid workforce, if you take into consideration how unprofessional many of them were. They didn't seem local either, BTW. The air conditioner, for example, looked centrally controlled even if you could set your desired temperature. The result of turning it on, though, was just cold air at 16°C that would never stop. So our feeling was that it was a good hotel with shitty management. Good overall quality spoiled by inattention to detail and no care for the customer experience.

This could be explained by the Covid pandemic, though. When Covid struck, the entire island realized how dependent they were on tourism. I suppose the smaller businesses quickly collapsed, leaving just the big corporate chains with their unique mentality on cost reduction. But I may be wrong. Four Views hotels might just be bad in general.

I expected to go to Madeira and eat fish and seafood for the entire stay. I was amazed to find that most places didn't serve much seafood and, if they did, it was expensive and the variety on offer was pretty low, even in places outside Funchal. We didn't go to many restaurants, though, so we might just have been unlucky. Also, most of the "traditional" food they have in Madeira is really bland, which is surprising considering how much spice the Portuguese were transporting through the island. My recommendation regarding the food is to go to the market, buy some chili and keep it with you at all times. Mustard, too, if you can.

We spent the first two days walking around, which can be as difficult as you choose, depending on whether you walk along the ocean side, which is flat, or go towards the center, which is really steep. We took the cable car to two large gardens: the Botanical Garden and Monte Palace Tropical. We went to Monte Palace first, which was wonderful, with two small museums inside - African sculpture and Geological - and a beautiful and carefully tended garden, tiles, Asian motifs and more. Only then did we go to the botanical one, only to find that it was smaller and much less well tended. It was almost a disappointment, even if it had more types of plant life. Personally, I thought the Monte Palace Tropical garden was a lot more beautiful. We walked back, which was funny, as we went down a 40 degree slope on a street for an hour or so. Hint: cut your toenails before going to Madeira. I tried to find the street on Google Maps, but apparently not even they are adventurous enough to map it in Street View.

On the second day we took the Hop-on bus to a nearby fishing town called Câmara de Lobos. I liked it there a lot, as it has a big street filled with bars and restaurants, where they do serve local food and the local cocktails. The Poncha (pronounced Ponsha) was originally a combination of rhum agricole (traditionally made from fresh sugar cane juice, unlike the molasses-based industrial rum which is most common today outside Madeira), lemon juice and lemon zest rubbed with sugar. It tastes a bit like a gimlet and you find it under the name Pescador Poncha. However, the modern variation of the cocktail (called Traditional Poncha for some reason) is a combination of orange juice, rhum agricole and honey. I do believe this one is the better version, as the tastes of orange, rum and honey mix very well together without covering each other up. Anyway, any combination of sugar, citrus juice and rum is a poncha and you can find many variants on the island. Note that there are bars and then there are poncha bars. The cocktail needs to be made fresh, so don't get the bottles for tourists. The English word "punch" has the same etymological roots.

The next two days we had hired a local guide. I have to tell you that this was the best decision we made there (perhaps the second best, next to the one to not rent a car!). Not only did we skip the group tours organized by the tourist agency, but we also looked for a guide that would take only us around - there are others that do a similar thing, but with convoys of cars. We stumbled upon Go Local, run by Valdemar Andrade, who comes to your hotel in his trusty Nissan 4x4 and takes you to see Madeira in ways that only locals see it, then brings you back. I am talking various villages, some so isolated that they have rare contact with anybody, forest roads that no one knows about, restaurants and bars that give you the authentic Madeiran experience, volcanic black sand beaches, mountain top walks and so much more. Valdemar is also a very fun conversationalist, boasting immense pride in his island and knowledgeable about all aspects of Madeira, starting with history, politics, geography, flora and fauna, economy and ending with any small detail you can think of. It was great fun to visit the island from the open roof car, just the way I like it: long trips with a lot of nature, without any effort on my part :) If you decide to go, I warmly recommend him.

On the fifth day we went on a hike along one of the artificial water channels I mentioned earlier, called levadas. Recommended by Val, I think it may have been the best of them all: Ribeiro Frio - Portela - Levada do Furado (PR10). We looked for another one the next day and didn't find any that were as long, as beautiful or as conveniently placed, relative to our hotel, of course. Imagine an 11 km hike on a more or less flat path that goes around an entire mountain, under the shade of trees, with the cool of the water in the channel making the temperature as pleasant as possible, with almost no annoying insects and with mountain vistas that take your breath away. We got there by Bolt and returned from the other end by local bus.

On the last full day we went on the Blandy's wine tour. Blandy's is a Madeiran wine company and the tour shows how the wine is made and bottled. It also gives you the option to buy wine at a discount and then pick it up at the airport duty-free shop, which I found very convenient. The tour was nice, too, with a very pleasant guide explaining everything. Madeiran wine is a fortified wine, where strong alcohol is added to stop fermentation rather than waiting for fermentation to reach that alcohol level naturally. It also has unique properties because of the preparation process, which emulates the effects of months-long sea voyages with the wine barrels indirectly heated by the sun. It has a very interesting taste that I enjoyed, with intense flavors given by the wood of the barrels, reminding me a bit of Asian rice wines.

Then we went to the old city, a place of small streets filled with restaurants, but really trappy. After that we took the Hop-on bus to the ocean side of Funchal and walked there and on the nearby beach - the island doesn't have a lot of beaches, which I believe is a good thing.

There are a lot of details that I've left out: the flight; the outdoor pool experience under the Madeiran sun, feeling the breeze; the Portuguese obsession with the sh sound (as in Poncha, Lobos, Seixal, etc.); the laws that prohibit logging or building above 800 m, which I believe are amazing and should perhaps be implemented in some areas of my country as well; how the local (rather feudal) politics make access to the island by ferry difficult, and so stealing on the island is almost nonexistent (where would you go with the stuff?); the invasive species, like the tobacco tree or eucalyptus, which are in constant battle with the native flora; and so on. Ask me if you want any specifics.

What I loved most about Madeira was the landscape and flora. Beautiful trees and flowers, pristine mountain slopes and, in the built-up areas, a common building style that didn't grate on the eye. I also had the feeling that this might change in the future. I hope Madeira stays like this, but I feel like private interests are slowly but surely eroding the local culture and legislation. While we were there, there was construction everywhere, with four large cranes visible from our hotel room alone.
