Temporary tables better than table variables!

Today I had a very interesting discussion with a colleague who optimized my work in Microsoft SQL Server by replacing a table variable with a temporary table. That was annoying, since I've done the opposite plenty of times, thinking that I was choosing the better solution. After all, temporary tables have the overhead of being stored in tempdb, on disk. What could possibly be wrong with using a table variable? I believe this table explains it all:

First of all, the storage is the same. How? Well, table variables are not a purely in-memory structure: like temporary tables, they are backed by tempdb, and whether their pages stay in memory or get written to disk depends on their size and on memory pressure. Another interesting bit is the indexes. You can declare primary keys and unique constraints on table variables (and, from SQL Server 2014 on, inline indexes), but you can't add an index after the declaration. That's OK, though, because you would hardly need very complex table variables. But then there is the parallelism: none for statements that modify table variables! As you will see, that's rather important. At least table variables don't cause recompilations. And last, but certainly not least, perhaps the most important difference: statistics! You don't have statistics on table variables.
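To make the index difference concrete, here is a minimal T-SQL sketch. The names `@Ids`, `#Ids`, `CreatedOn` and `IX_Ids_CreatedOn` are made up for illustration; they are not from my actual code.

```sql
-- Table variable: keys and constraints have to be declared inline,
-- and no index can be added after the DECLARE.
DECLARE @Ids TABLE
(
    Id        INT       NOT NULL PRIMARY KEY,
    CreatedOn DATETIME2 NOT NULL
);

-- Temporary table: behaves like a regular table in tempdb,
-- so it can be indexed later and it gets column statistics.
CREATE TABLE #Ids
(
    Id        INT       NOT NULL PRIMARY KEY,
    CreatedOn DATETIME2 NOT NULL
);

CREATE NONCLUSTERED INDEX IX_Ids_CreatedOn ON #Ids (CreatedOn);

-- The temporary table can be dropped explicitly;
-- the table variable simply goes away at the end of the batch.
DROP TABLE #Ids;
```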

Let's consider my scenario: I was executing a stored procedure and storing the selected values in a table variable. This SP had the single reason to filter the ids of records that I would then have to extract - joining them with a lot of other tables - and could return 200, 800 or several hundred thousand rows.
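The pattern looks roughly like the sketch below. `#GetFilteredIds` is a hypothetical stand-in for my filtering procedure, and it reads from the system view `sys.all_objects` only so that the example runs without any user tables; the real procedure and tables were different.

```sql
-- Hypothetical stand-in for the filtering stored procedure.
CREATE PROCEDURE #GetFilteredIds
AS
BEGIN
    SET NOCOUNT ON;
    SELECT object_id FROM sys.all_objects;
END;
GO

-- Store the ids returned by the procedure, here in a temporary table.
-- The original version used DECLARE @FilteredIds TABLE (...) instead.
CREATE TABLE #FilteredIds (Id INT NOT NULL PRIMARY KEY);

INSERT INTO #FilteredIds (Id)
EXEC #GetFilteredIds;

-- Join the stored ids with the other tables to extract the records.
SELECT o.name, o.type_desc
FROM #FilteredIds AS f
JOIN sys.all_objects AS o ON o.object_id = f.Id;

DROP TABLE #FilteredIds;
DROP PROCEDURE #GetFilteredIds;
```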

With a table variable this means:

- when inserting potentially hundreds of thousands of rows I would have no parallelism (slow!) and it would probably be written to tempdb anyway (slow!)

- when joining other tables with it, not having statistics, the optimizer would treat it like a short list of values, which it potentially wasn't, and loop through it: Table Spool (slow!)

- various profiling tools would show the same or even fewer physical reads and the same SQL Server execution time, but the CPU time would be larger than the execution time (hidden slow!)
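If you want to see these numbers yourself, one simple way is to switch on `SET STATISTICS IO` and `SET STATISTICS TIME` and run both variants side by side. This is only a sketch: it uses system views as sample data (an assumption made so it runs anywhere), and on such small inputs the difference may be negligible.

```sql
SET STATISTICS IO ON;
SET STATISTICS TIME ON;

-- Variant 1: table variable
DECLARE @Ids TABLE (Id INT NOT NULL PRIMARY KEY);

INSERT INTO @Ids (Id)
SELECT object_id FROM sys.all_objects;

SELECT COUNT(*)
FROM @Ids AS i
JOIN sys.all_columns AS c ON c.object_id = i.Id;

-- Variant 2: temporary table
CREATE TABLE #Ids (Id INT NOT NULL PRIMARY KEY);

INSERT INTO #Ids (Id)
SELECT object_id FROM sys.all_objects;

SELECT COUNT(*)
FROM #Ids AS i
JOIN sys.all_columns AS c ON c.object_id = i.Id;

DROP TABLE #Ids;

SET STATISTICS TIME OFF;
SET STATISTICS IO OFF;
```

The Messages tab then shows logical and physical reads plus CPU and elapsed time per statement, and the actual execution plans show the estimated versus actual row counts for each variant.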

This situation has been improved considerably in SQL Server 2019, to the point that in most cases table variables and temporary tables show the same performance, but earlier versions show the difference to a much larger degree.
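As far as I know, the 2019 improvement is the feature called table variable deferred compilation, which depends on the database compatibility level. The sketch below checks that level and, as an assumption on my part rather than something I used in the scenario above, shows the statement-level `OPTION (RECOMPILE)` hint that is commonly used on older versions so the optimizer sees the real row count of the table variable.

```sql
-- Compatibility level 150 corresponds to SQL Server 2019.
SELECT name, compatibility_level
FROM sys.databases
WHERE name = DB_NAME();

-- Sample data taken from system views so the sketch runs anywhere.
DECLARE @Ids TABLE (Id INT NOT NULL PRIMARY KEY);

INSERT INTO @Ids (Id)
SELECT object_id FROM sys.all_objects;

-- Recompiling this statement at execution time lets the optimizer
-- use the actual number of rows in @Ids instead of a fixed guess.
SELECT COUNT(*)
FROM @Ids AS i
JOIN sys.all_columns AS c ON c.object_id = i.Id
OPTION (RECOMPILE);
```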

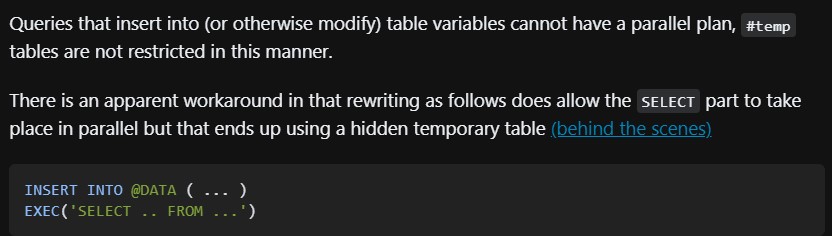

And then there are hacks. For my example, there is a reason why parallelism DOES occur:

So are temporary tables always better? No. There are several advantages of table variables:

- they get cleared automatically at the end of their scope

- they result in fewer recompilations of stored procedures

- they need less locking and logging, since changes to them are not part of the surrounding user transaction
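The transaction-related difference is easy to see with a rollback. This is a minimal sketch with made-up names (`@T`, `#T`): a row inserted into a table variable survives the rollback, while the one inserted into a temporary table does not.

```sql
DECLARE @T TABLE (Id INT NOT NULL);
CREATE TABLE #T (Id INT NOT NULL);

BEGIN TRANSACTION;

INSERT INTO @T (Id) VALUES (1);
INSERT INTO #T (Id) VALUES (1);

ROLLBACK TRANSACTION;

-- The table variable keeps its row; the temporary table is empty again.
SELECT
    (SELECT COUNT(*) FROM @T) AS TableVariableRows,  -- 1
    (SELECT COUNT(*) FROM #T) AS TempTableRows;      -- 0

DROP TABLE #T;
```

This can be handy (for example, keeping log rows after a failed transaction), but it also means a table variable will not be restored to its previous state if you roll back.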

For many simple situations, like where you want to generate some small quantity of data and then work with that, table variables are best. However, as soon as the data size or scenario complexity increases, temporary tables become better.

As always, don't believe me, test! In SQL everything "depends": you can't rely on fixed rules like "X is always better", so profile your particular scenarios and see which solution is better.

Hope it helps!
